At EdgeMachines we develop cutting-edge, end-to-end IoT & edge computing systems for the modern world using open tools, protocols and standards. Our hardware and software platform allows us to deploy low-power devices in the real world without the need for expensive, high-bandwidth infrastructure. Using our AI models and FPGA & ASIC accelerators, we can create advanced IoT systems that gather complex data from the real world. From harsh industrial environments to remote national parks, find out how our AIoT Platform can monitor your world.

Thanks to StreetNet’s low-power design, it is capable of providing smart object detection & tracking while still meeting the limited power budget of the Zhaga Book 18 standard. StreetNet sensors don’t need additional batteries or external power sources (e.g. solar), greatly reducing ongoing maintenance costs while still allowing the sensor to operate 24/7.

Zhaga Socket Compatible

Capture a wealth of information previously unavailable from traditional smart city sensors. Traditional traffic sensors based on pressure, motion, radar or lidar sensing are generally good at detecting moving objects; however, they have a poor understanding of the static environment around them. Using embedded computer vision, StreetNet enables a broader range of sensing outputs suited to varied street lighting applications, including roadways, parks, parking lots, and school, university & corporate campuses. Simplify your IoT stack by investing in one platform that can be deployed across your varied fleet of lighting assets.

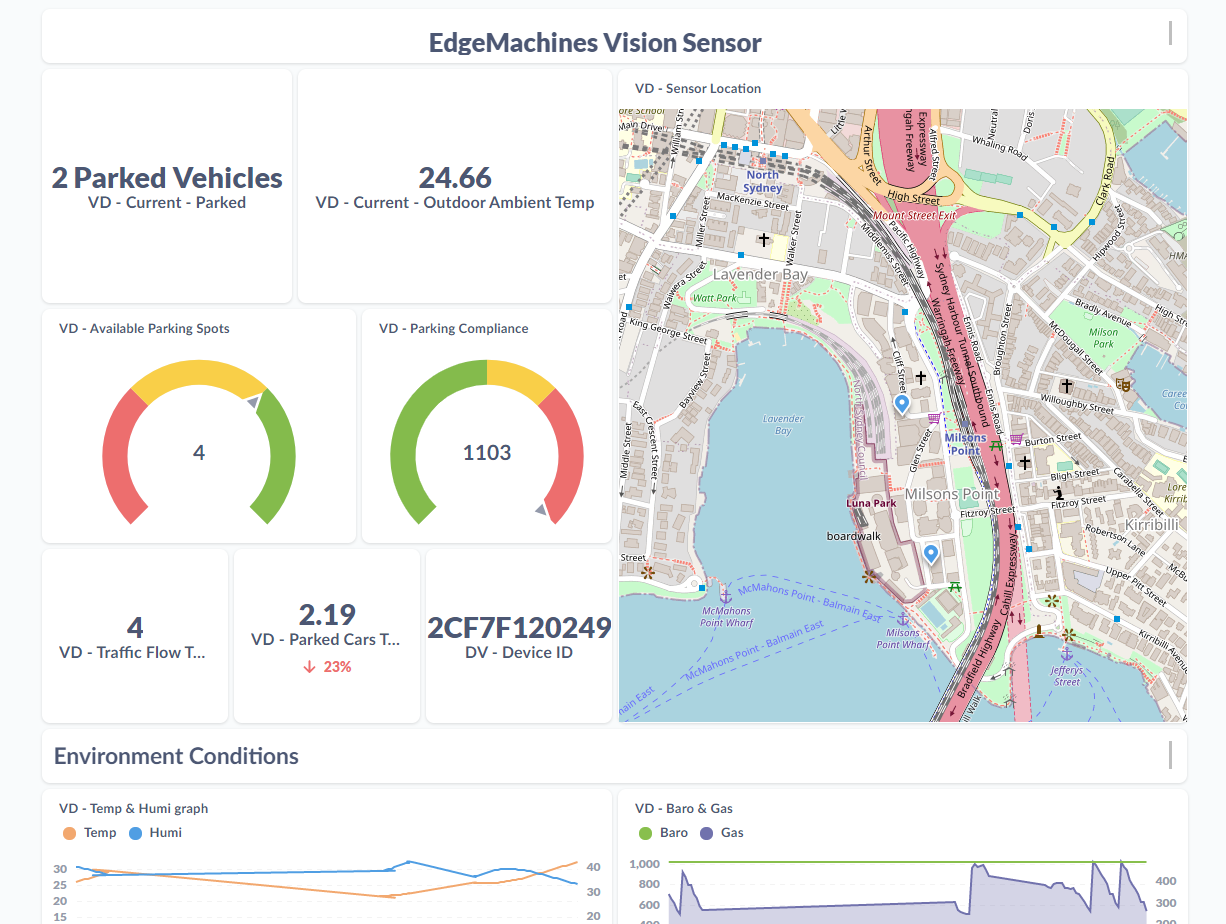

From traffic & cycleway monitoring to smart parking lots & curbside parking, our sensor can provide a wealth of data, streamed directly to the StreetNet dashboard & app or accessible via an open API.

Environmental Sensors

Smarter waste management

Energy Efficiency

Beyond simply monitoring noise levels in decibels, sound sensing uses our embedded AI hardware to continually process & classify sounds on the device. Because the device does all the processing, no audio data is ever recorded or transmitted off the device.
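To illustrate the privacy pattern described above, here is a minimal Python sketch, assuming a hypothetical `classify` callable in place of our embedded AI model: each audio frame is classified in memory and only a small `SoundEvent` record (label, confidence, level) is returned, so the raw samples never leave the function. The names `SoundEvent`, `process_frame` and `toy_classifier` are illustrative only and are not part of any StreetNet API.

```python
import math
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical classifier interface: frame of samples -> (label, confidence).
Classifier = Callable[[Sequence[float]], Tuple[str, float]]


@dataclass(frozen=True)
class SoundEvent:
    """The only record allowed off the device: no audio samples are kept."""
    label: str
    confidence: float
    level_dbfs: float


def rms_dbfs(samples: Sequence[float]) -> float:
    """RMS level of samples normalised to [-1.0, 1.0], in dB full scale."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


def process_frame(samples: Sequence[float], classify: Classifier,
                  min_confidence: float = 0.6,
                  ignore: frozenset = frozenset({"background"})) -> Optional[SoundEvent]:
    """Classify one frame in memory; return event metadata or None, never audio."""
    label, confidence = classify(samples)
    if label in ignore or confidence < min_confidence:
        return None  # nothing to report; the frame is simply discarded
    return SoundEvent(label, round(confidence, 3), round(rms_dbfs(samples), 1))


def toy_classifier(samples: Sequence[float]) -> Tuple[str, float]:
    """Hypothetical stand-in for an on-device model: any loud peak is an 'impulse'."""
    peak = max((abs(s) for s in samples), default=0.0)
    return ("impulse", peak) if peak > 0.5 else ("background", 1.0 - peak)
```

For example, `process_frame([0.0] * 100 + [0.9] + [0.0] * 99, toy_classifier)` returns an `impulse` event with confidence 0.9, while a quiet frame returns `None`. A real deployment would swap `toy_classifier` for the embedded model; the point of the design is that `process_frame` has no code path that stores or returns `samples`.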

Impulse Sounds

Conservation & Protection

Classify environmental sounds to detect traffic, hooning cars, chainsaws, gunshots, crashes, barking dogs, yelling, sirens, construction, fighting and wildlife.

Optional depth vision

Hear everything

Embedded AI Acceleration

Exceptionally Power Efficient


* Up to 3.5 W when using spatial AI

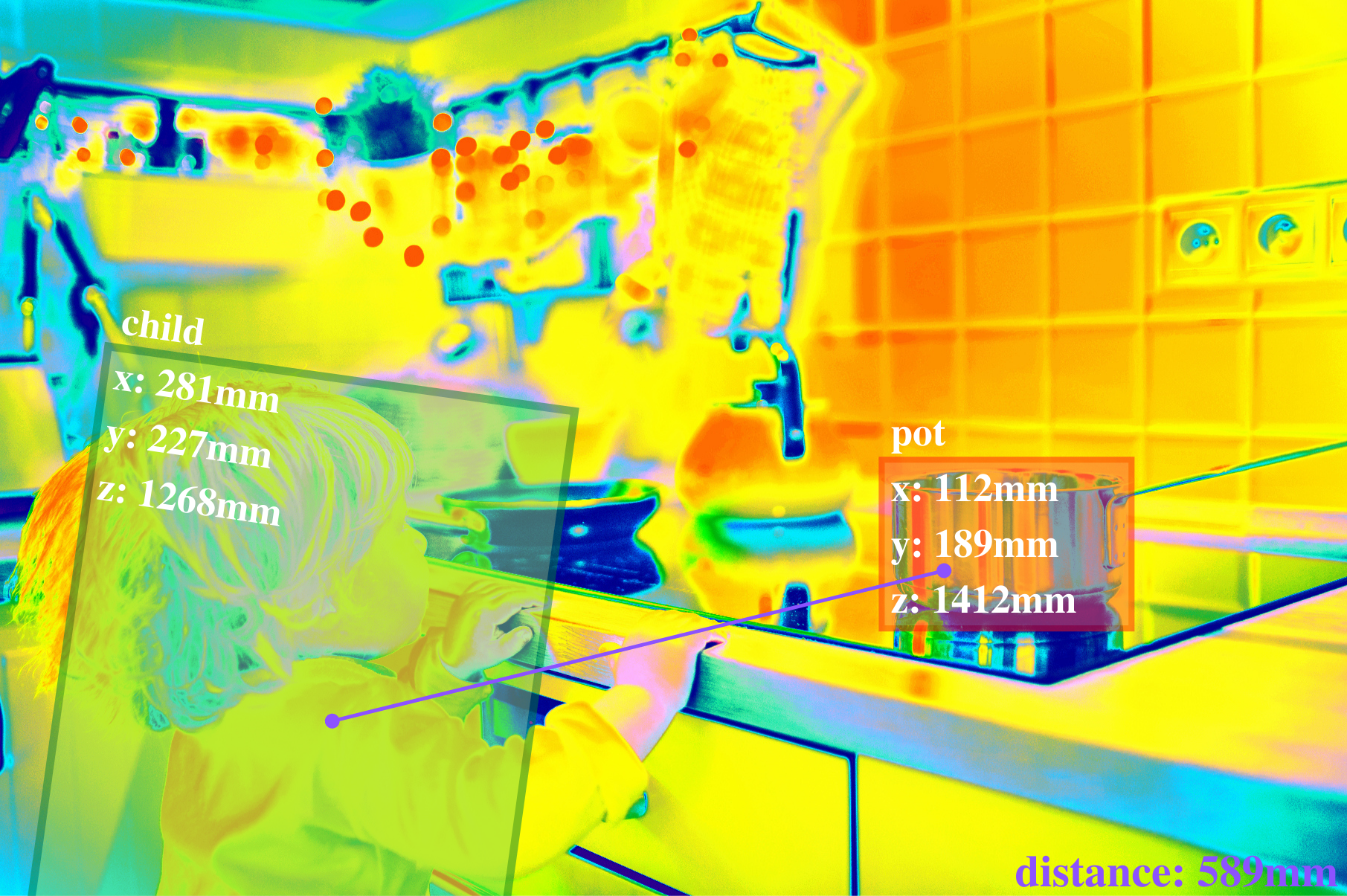

Hazards in workplace and home settings can be identified in real time on the device. Stereo vision allows our sensors to understand depth within a scene.
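As a rough illustration of how stereo vision recovers depth, here is a minimal Python sketch of the standard pinhole-camera relation for a rectified stereo pair, Z = f·B/d, where f is the focal length in pixels, B the baseline between the two cameras in metres and d the disparity in pixels. The source does not describe StreetNet's depth pipeline, so the function names and the example values (f = 800 px, B = 0.075 m) are assumptions for illustration only, not sensor specifications.

```python
def depth_from_disparity(disparity_px: float, focal_length_px: float,
                         baseline_m: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair (pinhole camera model)."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity (or a failed match).
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def depth_step(depth_m: float, focal_length_px: float, baseline_m: float,
               disparity_step_px: float = 1.0) -> float:
    """Approximate depth change for a disparity error: Z^2 / (f * B) * step."""
    return depth_m ** 2 / (focal_length_px * baseline_m) * disparity_step_px
```

With these assumed values, a 20-pixel disparity gives a depth of about 3 m, and a one-pixel matching error at that range shifts the estimate by roughly 0.15 m; at 6 m the same error grows to about 0.6 m. Because the error grows with the square of distance, stereo depth is most precise for objects close to the sensor.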

Stereo Vision

Human-Machine Safety

We focus on developing products & projects that use open APIs, putting data ownership in the hands of our clients, reducing installation & processing costs, increasing ease of integration and reducing lock-in risks.

We are now entering the age of edge computing. As devices become more intelligent and users become more privacy-conscious, edge computing allows us to deploy sensor solutions that need no cloud processing. Performing computation & inference on the device at the edge means devices require less bandwidth. Our sensors are designed to operate over low-bandwidth, low-power wide-area networks (LPWANs) and are faster to deploy.
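To illustrate why on-device inference suits low-bandwidth links, here is a minimal Python sketch of a compact uplink message in which the sensor sends per-class object counts rather than images. The field layout, the class list and the names `encode_counts` and `decode_counts` are hypothetical assumptions, not StreetNet's actual wire format; the sketch only shows how small such a report can be when the heavy lifting happens on the device.

```python
import struct
from typing import Dict, Tuple

# Hypothetical uplink layout, big-endian with no padding:
#   uint32 Unix timestamp | uint8 status flags | one uint16 count per class
CLASSES = ("pedestrian", "bicycle", "car", "truck")
FORMAT = ">IB" + "H" * len(CLASSES)
PAYLOAD_SIZE = struct.calcsize(FORMAT)  # 4 + 1 + 4 * 2 = 13 bytes


def encode_counts(timestamp: int, status: int, counts: Dict[str, int]) -> bytes:
    """Pack per-class object counts into a fixed-size binary payload."""
    values = [min(counts.get(c, 0), 0xFFFF) for c in CLASSES]  # saturate at uint16 max
    return struct.pack(FORMAT, timestamp, status, *values)


def decode_counts(payload: bytes) -> Tuple[int, int, Dict[str, int]]:
    """Inverse of encode_counts, e.g. for a network server or dashboard backend."""
    timestamp, status, *values = struct.unpack(FORMAT, payload)
    return timestamp, status, dict(zip(CLASSES, values))
```

For comparison, a single uncompressed 640 × 480 8-bit greyscale frame is 307,200 bytes, so sending a 13-byte count record instead of imagery shrinks each report by more than four orders of magnitude.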

Privacy first design

Open protocols

By leveraging our base embedded device platform along with our expertise in data science, computer vision, machine learning and edge computing we can provide unique, practical solutions to previously infeasible or uneconomic sensing and data challenges.